This was a simple experiment to test the benefits of curiosity-driven exploration for autonomous agents. It became a short student paper at the AAAI 2006 conference. The following is the paper's abstract:

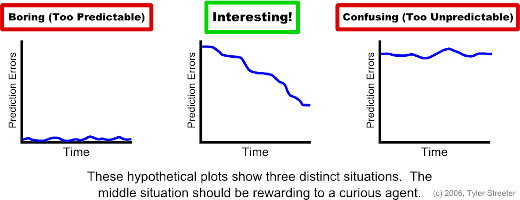

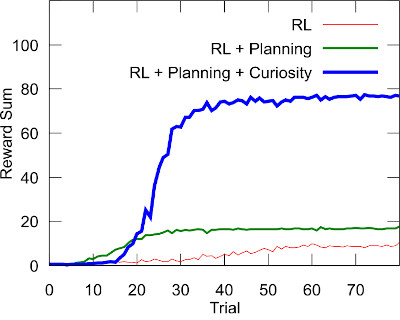

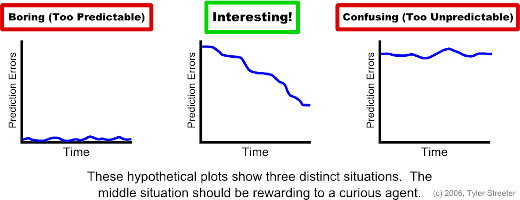

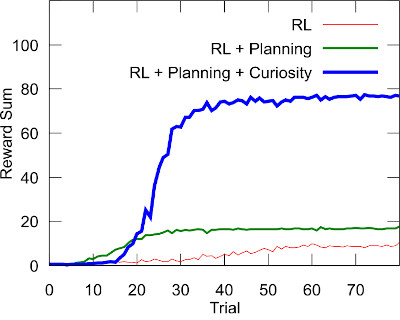

Reinforcement learning (RL) agents can reduce learning time dramatically by planning with learned predictive models. Such planning agents learn to improve their actions using planning trajectories, sequences of imagined interactions with the environment. However, planning agents are not intrinsically driven to improve their predictive models, which is a necessity in complex environments. This problem can be solved by adding a curiosity drive that rewards agents for experiencing novel states. Curiosity acts as a higher form of exploration than simple random action selection schemes because it encourages targeted investigation of interesting situations.
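As a rough illustration of a curiosity drive that rewards novel states, here is a minimal sketch using a count-based novelty bonus. This is an assumption made for illustration, not the formulation used in the paper: the `CuriosityBonus` class, the `scale` parameter, and the inverse-square-root decay are all hypothetical choices.

```python
import math
from collections import defaultdict


class CuriosityBonus:
    """Hypothetical count-based novelty bonus (not the paper's method)."""

    def __init__(self, scale=1.0):
        self.scale = scale              # assumed weight of the intrinsic reward
        self.visits = defaultdict(int)  # visit count per state

    def reward(self, state):
        # Record the visit, then pay a bonus that shrinks as the state
        # becomes familiar: scale / sqrt(visit count).
        self.visits[state] += 1
        return self.scale / math.sqrt(self.visits[state])


def total_reward(external, state, bonus):
    # The agent learns from the external reward plus the intrinsic bonus.
    return external + bonus.reward(state)
```

Under this sketch, a state seen for the first time earns the full bonus, and repeated visits earn progressively less, so the agent is nudged toward states it has rarely experienced.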

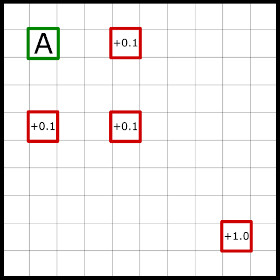

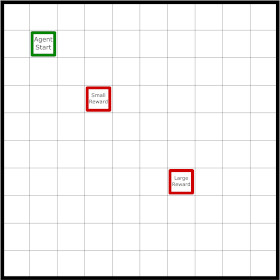

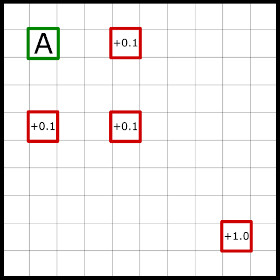

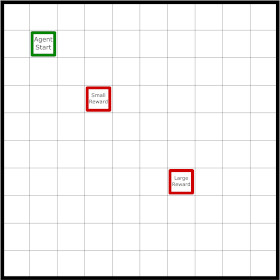

In a task with multiple external rewards, we show that RL agents using uncertainty-limited planning trajectories and intrinsic curiosity rewards outperform non-curious planning agents. The results show that curiosity helps drive planning agents to improve their predictive models by exploring uncertain territory. To the author's knowledge, no previous work has tested the benefits of curiosity with planning trajectories.
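One way to picture an uncertainty-limited planning trajectory is an imagined rollout through a learned model that stops as soon as the model becomes too unsure of its next prediction. The sketch below assumes a deterministic tabular model and a visit-count uncertainty estimate; `TabularModel`, `plan_trajectory`, `max_uncertainty`, and `max_steps` are hypothetical names, and the paper's actual model and uncertainty measure may differ.

```python
class TabularModel:
    """Hypothetical deterministic tabular model with visit counts."""

    def __init__(self):
        self.next_state = {}  # (state, action) -> predicted next state
        self.reward = {}      # (state, action) -> predicted reward
        self.counts = {}      # (state, action) -> number of real observations

    def update(self, s, a, r, s2):
        # Learn from one real transition.
        self.next_state[(s, a)] = s2
        self.reward[(s, a)] = r
        self.counts[(s, a)] = self.counts.get((s, a), 0) + 1

    def uncertainty(self, s, a):
        # Assumed measure: 1 for unseen pairs, shrinking with experience.
        return 1.0 / (1.0 + self.counts.get((s, a), 0))


def plan_trajectory(model, start, policy, max_uncertainty=0.4, max_steps=20):
    """Imagine a rollout, stopping where the model is too uncertain."""
    trajectory = []
    s = start
    for _ in range(max_steps):
        a = policy(s)
        if model.uncertainty(s, a) > max_uncertainty:
            break  # do not plan through poorly understood transitions
        r, s2 = model.reward[(s, a)], model.next_state[(s, a)]
        trajectory.append((s, a, r, s2))
        s = s2
    return trajectory
```

In this sketch, the places where rollouts get cut short are exactly the transitions the agent knows least about, which is where a curiosity reward would encourage it to gather real experience.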